MLOps

Context

IT departments of large retailers across the globe usually generate a huge volume of data across the enterprise. They also have mature tools in place that enable experimentation with new problems and solutions, primarily around harnessing this data and extracting meaningful insights from it.

Data science can be leveraged in such situations to build predictive models that deliver business value to their users.

Use Cases

Analyse CI/CD

- Analyse activity data from DevOps tools to get transparency into the delivery process
  - Jira, Git, Jenkins, SonarQube, Puppet, Ansible, Terraform
- Uncover anomalies in the data
  - large code volumes, long build times, slow release rates, late code check-ins
- Identify inefficiencies in the SDLC
  - inefficient resourcing, excessive task switching, process slowdowns
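
One way to uncover anomalies such as long build times is to compare each new build against a historical baseline. The sketch below is a minimal illustration, not a feature of any particular DevOps tool: it assumes build durations have already been extracted (e.g. from Jenkins) into plain Python lists, and the function name `flag_slow_builds`, the sample data, and the 3-standard-deviation threshold are all hypothetical choices.

```python
import statistics


def flag_slow_builds(history, recent, threshold=3.0):
    """Return the IDs of recent builds whose duration is more than
    `threshold` standard deviations above the historical mean.

    history: past build durations in seconds (the baseline, at least 2 values)
    recent:  (build_id, duration_seconds) pairs to check
    """
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation
    if stdev == 0:
        # Every past build took exactly as long: flag anything slower.
        return [build_id for build_id, duration in recent if duration > mean]
    return [
        build_id
        for build_id, duration in recent
        if (duration - mean) / stdev > threshold
    ]


if __name__ == "__main__":
    # Hypothetical build durations (seconds) exported from a CI server.
    past = [300, 310, 295, 305, 290, 315, 300, 298]
    new_builds = [("b101", 302), ("b102", 610), ("b103", 289)]
    print(flag_slow_builds(past, new_builds))  # prints ['b102']
```

Scoring new builds against a separate baseline, rather than against a window that includes them, keeps a single very slow build from inflating the standard deviation and masking itself.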

Analyse QA

- Analyse output from testing tools

- Uncover errors

- Build a better understanding of the testing on every release, raising the quality of delivered applications
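
Many testing tools and CI servers can emit results in the JUnit-style XML report format. Assuming that format, a minimal sketch for pulling failures and errors out of a report with Python's standard `xml.etree.ElementTree` module might look like this; the function name `summarise_junit` and the sample report are hypothetical.

```python
import xml.etree.ElementTree as ET


def summarise_junit(xml_text):
    """Count test cases in a JUnit-style XML report and list the names of
    those that failed (assertion failures) or errored (unexpected exceptions)."""
    root = ET.fromstring(xml_text)
    total, failed, errored = 0, [], []
    # iter() searches the whole tree, so this works whether the root element
    # is <testsuites> or a single <testsuite>.
    for case in root.iter("testcase"):
        total += 1
        name = f"{case.get('classname', '')}.{case.get('name', '')}"
        if case.find("failure") is not None:
            failed.append(name)
        elif case.find("error") is not None:
            errored.append(name)
    return {"total": total, "failed": failed, "errored": errored}


# Hypothetical report for one release.
REPORT = """<testsuite name="checkout" tests="3">
  <testcase classname="cart" name="test_add_item"/>
  <testcase classname="cart" name="test_remove_item">
    <failure message="expected 0 items, got 1"/>
  </testcase>
  <testcase classname="payment" name="test_refund">
    <error message="timeout"/>
  </testcase>
</testsuite>"""

if __name__ == "__main__":
    print(summarise_junit(REPORT))
```

Running the same summary for every release and tracking the counts over time is one simple way to build the release-by-release view of testing described above.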

Security Analysis

- Identify anomalies that may represent malicious activity
  - anomalous patterns of access to sensitive repos, deployment activity, test execution, system provisioning
- Identify users exercising ‘known bad’ patterns
  - deploying unauthorized code
  - stealing intellectual property
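
For anomalous patterns of access to sensitive repos, a simple starting point is to compare each user's access count with that of their peers. The sketch below uses the modified z-score, 0.6745 * (x - median) / MAD with the conventional 3.5 cut-off, which is robust to the very outliers it is looking for. The input dictionary, the function name `flag_unusual_access`, and the threshold are assumptions for illustration; a real system would need per-role baselines and much richer features.

```python
import statistics


def flag_unusual_access(access_counts, threshold=3.5):
    """Flag users whose count of accesses to sensitive repositories is far
    above their peers', using the modified z-score
    0.6745 * (x - median) / MAD (MAD = median absolute deviation)."""
    counts = list(access_counts.values())
    median = statistics.median(counts)
    mad = statistics.median([abs(c - median) for c in counts])
    if mad == 0:
        # At least half of the users share the median count, so the score is
        # undefined; fall back to flagging anyone above the median.
        return sorted(user for user, c in access_counts.items() if c > median)
    return sorted(
        user
        for user, c in access_counts.items()
        if 0.6745 * (c - median) / mad > threshold
    )


if __name__ == "__main__":
    # Hypothetical accesses to sensitive repos per user in one day.
    today = {"alice": 12, "bob": 9, "carol": 11, "dave": 10, "eve": 95}
    print(flag_unusual_access(today))  # prints ['eve']
```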

Manage Production Systems

- Analyse applications in production
  - large data volumes, user counts, transactions, resource utilization, transaction throughput, etc.
- Detect abnormal patterns
  - DDoS conditions, memory leaks, race conditions
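
A memory leak often shows up as a steady upward trend in memory usage rather than a single spike. Assuming memory samples are available as (hours, MB) pairs from a monitoring tool, the minimal sketch below fits a least-squares slope and flags growth above a chosen rate. The function names and the 10 MB/hour limit are hypothetical, and a positive slope alone does not prove a leak (a cache warming up can look similar).

```python
def memory_growth_rate(samples):
    """Least-squares slope of memory usage over time, in MB per hour.

    samples: (timestamp_hours, memory_mb) pairs from a monitoring tool
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    # slope = sum((t - mean_t)(m - mean_m)) / sum((t - mean_t)^2)
    s_tm = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    s_tt = sum((t - mean_t) ** 2 for t, _ in samples)
    if s_tt == 0:
        raise ValueError("timestamps must not all be equal")
    return s_tm / s_tt


def looks_like_leak(samples, max_growth_mb_per_hour=10.0):
    """Hypothetical rule: sustained growth above the limit suggests a leak."""
    return memory_growth_rate(samples) > max_growth_mb_per_hour


if __name__ == "__main__":
    leaking = [(0, 500), (1, 540), (2, 580), (3, 620)]
    stable = [(0, 500), (1, 505), (2, 498), (3, 502)]
    print(memory_growth_rate(leaking), looks_like_leak(leaking))  # 40.0 True
    print(looks_like_leak(stable))  # False
```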

Manage Alerts

- Analyse the high number of alerts that occur in production systems
- Detect patterns
  - known good patterns
  - known bad patterns
- Filter alerts so they can be managed better
- Prevent production failures
- Analyse business impact
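
Detecting known good and known bad patterns and filtering on them can be sketched with regular expressions plus simple deduplication. The pattern lists, bucket names, and the `triage` function below are hypothetical; in practice the patterns would be curated or learned from historical alerts rather than hard-coded.

```python
import re
from collections import Counter

# Hypothetical pattern lists; in practice they would be curated or learned
# from historical alerts and incident reviews.
KNOWN_BAD = [re.compile(p) for p in (r"OutOfMemoryError", r"disk usage 9\d%")]
KNOWN_GOOD = [re.compile(p) for p in (r"scheduled backup started", r"health check ok")]


def triage(alerts):
    """Sort raw alert messages into escalate / suppress / review buckets.

    Exact duplicates are collapsed into one (message, count) entry, and
    known-bad patterns are checked first, so a message matching both lists
    is escalated rather than suppressed.
    """
    buckets = {"escalate": [], "suppress": [], "review": []}
    for message, count in Counter(alerts).items():
        if any(p.search(message) for p in KNOWN_BAD):
            buckets["escalate"].append((message, count))
        elif any(p.search(message) for p in KNOWN_GOOD):
            buckets["suppress"].append((message, count))
        else:
            buckets["review"].append((message, count))
    return buckets


if __name__ == "__main__":
    raw = [
        "health check ok",
        "health check ok",
        "java.lang.OutOfMemoryError in checkout-svc",
        "disk usage 97% on db-01",
        "latency p99 above 2s",
    ]
    for bucket, items in triage(raw).items():
        print(bucket, items)
```

Only the "review" bucket needs human attention, which is the point of filtering: operators see a short list of unfamiliar alerts instead of the full stream.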

The Approach

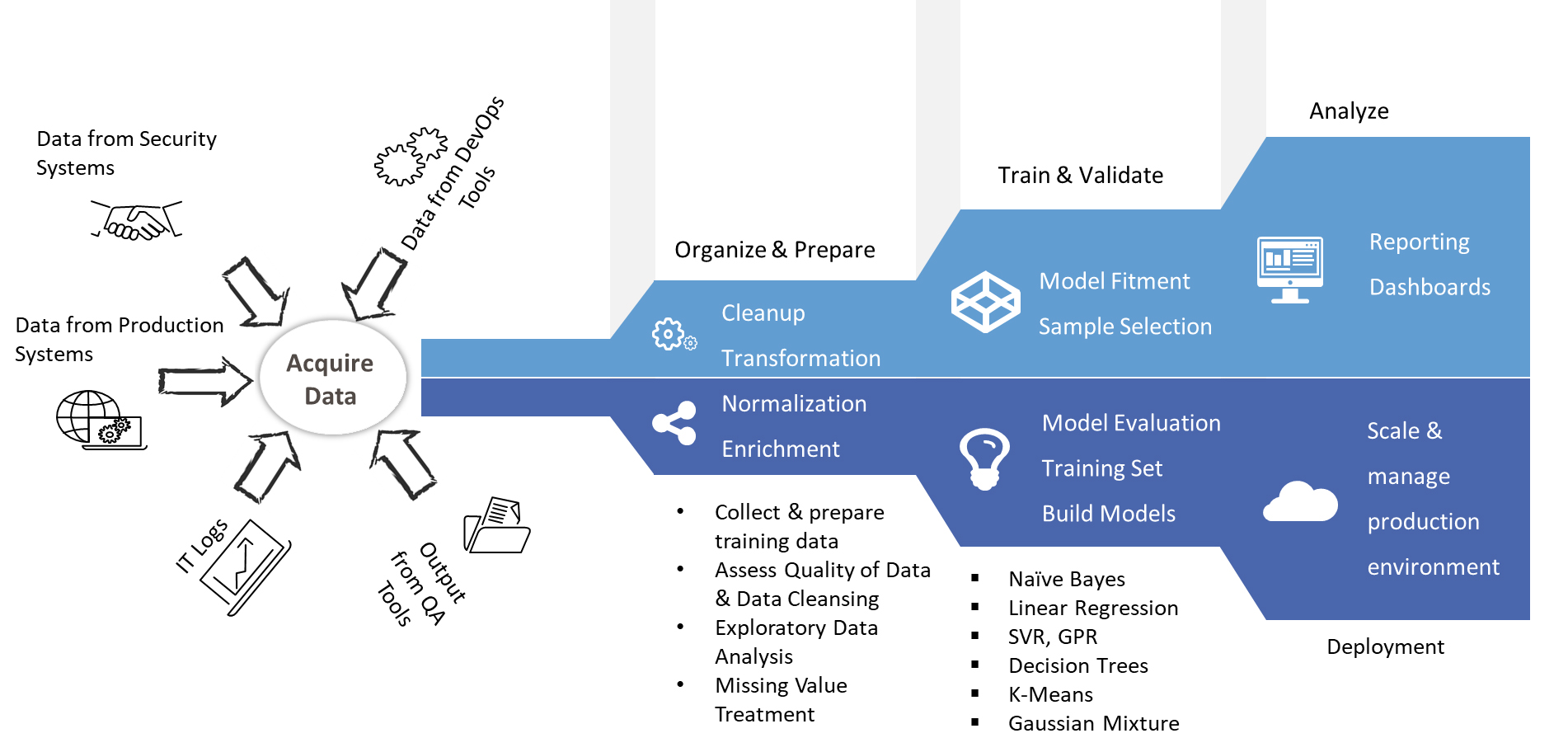

A high-level approach towards implementing such a system is illustrated below:

Key steps involved are:

- Data Source Identification – involves the identification of the relevant Data Sources across the enterprise

- Data Extraction – involves the mechanisms of getting permissions, extracting, and integrating the data from the various Data Sources

- Data Analysis – involves performing exploratory data analysis to understand the available data for building the ML model. This process leads to:
  - understanding the data schema and characteristics that are expected by the model
  - identifying the data preparation and feature engineering that are needed for the model

- Data Preparation – involves preparing the data for the ML task: cleaning it, splitting it into training, validation, and test sets, and applying the data transformations and feature engineering required by the model that solves the target task. The output of this step is the set of data splits in the prepared format.

- Model Training – data scientists apply different algorithms to the prepared data to train various ML models, and tune their hyperparameters to obtain the best-performing model. The output of this step is a trained model.

- Model Evaluation – the trained model is evaluated on a holdout test set to assess its quality. The output of this step is a set of metrics describing the quality of the model.

- Model Validation – the model is confirmed to be adequate for deployment, i.e., its predictive performance is better than a certain baseline.

- Model Serving – the validated model is deployed to a target environment to serve predictions.

- Model Monitoring – the model's predictive performance is monitored in production, and a drop in performance can trigger a new iteration of the ML process.
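
The key steps above can be strung together in a toy end-to-end sketch. It is illustrative only and rests on assumptions that are not in the original: the task (predicting whether a CI build fails from the number of lines changed), the synthetic data, the choice of a k-nearest-neighbours classifier whose `k` is the tuned hyperparameter, the majority-class baseline used for the validation gate, and all function names. Model Serving is omitted, and only the standard library is used.

```python
import random

# Hypothetical task: predict whether a CI build fails from one feature
# (lines changed in the commit). Data and thresholds are illustrative.


def make_dataset(n=200, seed=0):
    """Stand-in for Data Extraction: synthetic (lines_changed, failed) rows."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        lines = rng.randint(0, 1000)
        failed = lines > 600
        if rng.random() < 0.05:  # 5% label noise
            failed = not failed
        rows.append((lines, failed))
    return rows


def split(rows, seed=42, train=0.6, val=0.2):
    """Data Preparation: shuffle, then split into train/validation/test sets."""
    rows = rows[:]
    random.Random(seed).shuffle(rows)
    n_train = int(len(rows) * train)
    n_val = int(len(rows) * val)
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]


def knn_predict(train_rows, x, k):
    """Predict by majority vote of the k training rows nearest to x."""
    nearest = sorted(train_rows, key=lambda row: abs(row[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return votes * 2 > k


def accuracy(train_rows, eval_rows, k):
    hits = sum(knn_predict(train_rows, x, k) == y for x, y in eval_rows)
    return hits / len(eval_rows)


def tune_k(train_rows, val_rows, candidates=(1, 3, 5, 7, 9)):
    """Model Training with hyperparameter tuning: pick k on the validation set."""
    return max(candidates, key=lambda k: accuracy(train_rows, val_rows, k))


def baseline_accuracy(train_rows, test_rows):
    """Majority-class baseline: always predict the most common training label."""
    majority = sum(y for _, y in train_rows) * 2 > len(train_rows)
    return sum(y == majority for _, y in test_rows) / len(test_rows)


def should_deploy(model_acc, baseline_acc, margin=0.0):
    """Model Validation: deploy only if the model beats the baseline."""
    return model_acc > baseline_acc + margin


def needs_retraining(live_acc, validated_acc, tolerance=0.05):
    """Model Monitoring: start a new iteration if live accuracy falls more
    than `tolerance` below the accuracy measured at validation time."""
    return live_acc < validated_acc - tolerance


def run_pipeline():
    train_rows, val_rows, test_rows = split(make_dataset())
    k = tune_k(train_rows, val_rows)
    test_acc = accuracy(train_rows, test_rows, k)  # Model Evaluation
    base_acc = baseline_accuracy(train_rows, test_rows)
    return {"k": k, "test_accuracy": test_acc, "baseline_accuracy": base_acc,
            "deploy": should_deploy(test_acc, base_acc)}


if __name__ == "__main__":
    print(run_pipeline())
```

The design point worth noting is that `k` is chosen on the validation set while the reported accuracy comes from the untouched test set, so the evaluation metric is not biased by the tuning.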

Conclusion

Traditional domain-based IT management solutions cannot keep up with the volume of data generated in enterprise operations:

- They cannot intelligently separate significant events from the huge amount of enterprise data

- They cannot correlate data across different but interdependent environments

- They cannot provide the real-time insight and predictive analysis IT operations teams need to respond to issues fast enough to meet user and customer service level expectations

MLOps provides visibility into enterprise data and dependencies across all environments, analyses the data to extract significant events related to slowdowns or outages, and automatically alerts IT staff to problems, their root causes, and recommended solutions.

AIOps (AI for IT operations) leverages Big Data, Analytics, and Machine Learning capabilities to:

- Collect and aggregate “operations” data generated by multiple IT infrastructure components, applications, and performance-monitoring tools

- Intelligently select ‘signals’ out of the ‘noise’ to identify significant events and patterns related to system performance and availability issues

- Diagnose root causes and report them to IT for rapid response and remediation, or, in some cases, resolve these issues automatically without human intervention

How Can AIE Help?

AIE has strong experience in building MLOps solutions for enterprises. By replacing multiple separate, manual IT operations tools with a single, intelligent, and automated IT operations platform, MLOps enables IT operations teams to respond more quickly, even proactively, to slowdowns and outages with much less effort.

If you are interested in developing MLOps solutions, contact us for a free consultation and quote.